-

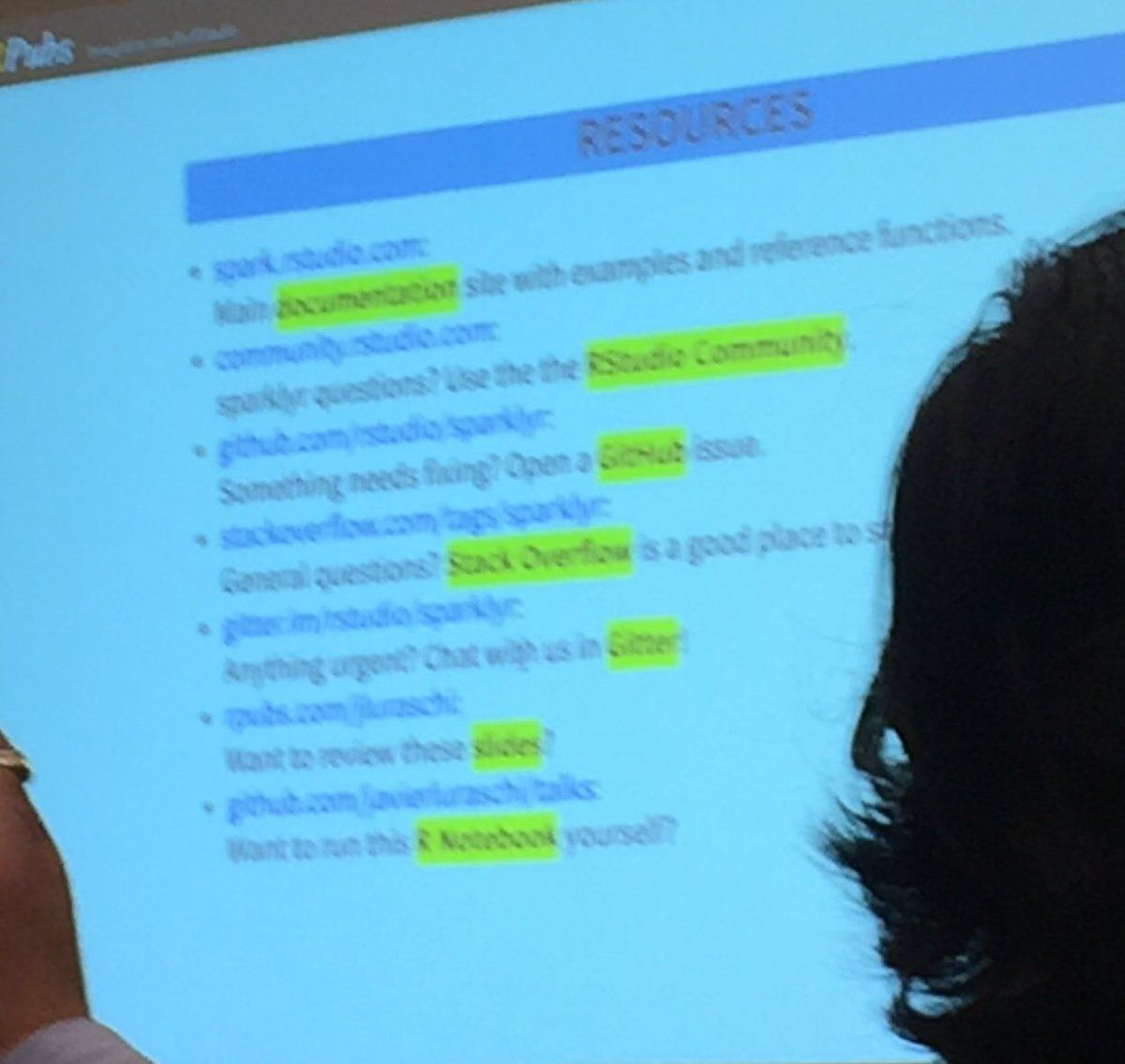

@javierluraschi talking about cluster computing made easy with Spark and R. #Cascadiarconf

-

@javierluraschi How much information exists in the world? Digital information has overtaken analog information. #Cascadiarconf

-

@javierluraschi How did Google process so much information? MapReduce. Split the data up and process the pieces in parallel (Map), then summarize the results (Reduce). #Cascadiarconf
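
The map/reduce split described in the tweet above can be sketched in a few lines of plain Python. This is only an illustration, not Google's or Hadoop's implementation: the function names (`map_chunk`, `reduce_counts`, `word_count`) are made up for this example, and local threads stand in for the machines of a real cluster.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def map_chunk(lines):
    """Map step: count words within one chunk of the data."""
    counts = defaultdict(int)
    for line in lines:
        for word in line.lower().split():
            counts[word] += 1
    return dict(counts)


def reduce_counts(partials):
    """Reduce step: merge the per-chunk counts into one summary."""
    total = defaultdict(int)
    for partial in partials:
        for word, n in partial.items():
            total[word] += n
    return dict(total)


def word_count(lines, n_chunks=2):
    """Split the data, map each chunk in parallel, then reduce."""
    # Assumption: threads stand in for cluster nodes in this sketch.
    chunks = [lines[i::n_chunks] for i in range(n_chunks)]
    with ThreadPoolExecutor(max_workers=n_chunks) as pool:
        partials = list(pool.map(map_chunk, chunks))
    return reduce_counts(partials)


if __name__ == "__main__":
    tweets = ["spark is fast", "hadoop is slow", "spark is in memory"]
    print(word_count(tweets))
```

Each chunk is counted independently, which is what lets the map step run in parallel; only the small per-chunk summaries need to be brought together in the reduce step.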

-

@javierluraschi Hadoop was the initial open-source implementation. Everything was disk-based and slow. Apache Spark works in memory and is faster. #Cascadiarconf

-

@javierluraschi What can you do with cluster computing? Deep learning algorithms need distributed computing now. #Cascadiarconf

-

@javierluraschi What to do with your slow code? Different approaches: 1) You can usually sample the data. 2) Use profvis to profile bottlenecks in your code. 3) Get a bigger computer. 4) Use sparklyr and scale out computational problems. #Cascadiarconf

-

@javierluraschi sparklyr package. Use spark_connect() to connect to a Spark cluster. Use the spark_read_*() functions to read data in, then use dplyr syntax or SQL statements. You can also use machine learning and modeling, such as linear regression. #Cascadiarconf

-

@javierluraschi Multiple packages build on sparklyr to do more sophisticated analysis, such as graph analysis. Real-time data can be represented as streams. Structured streams allow for parallel processing. #Cascadiarconf

-

@javierluraschi Can use SQL and dplyr with streams. Can't train on real-time data, but can train on static data and get scores from data streams. Can use streams as Shiny inputs. Ooooohhhh. #Cascadiarconf
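
The "train on static data, score the stream" pattern from the tweet above might look roughly like this. It is a plain-Python sketch and not the Spark or sparklyr API: `fit_line` and `score_stream` are hypothetical helpers, a one-variable least-squares line stands in for the model, and a Python iterator stands in for the data stream.

```python
def fit_line(xs, ys):
    """Train once on static data: ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def score_stream(stream, model):
    """Score records as they arrive; the model itself is never retrained."""
    slope, intercept = model
    for x in stream:
        yield x, slope * x + intercept


if __name__ == "__main__":
    # Static training data (made up for illustration).
    model = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
    # Assumption: an iterator stands in for a real-time source.
    for x, score in score_stream(iter([10, 20]), model):
        print(x, score)
```

Because `score_stream` is a generator, each incoming record is scored as it is pulled, without waiting for the stream to end.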

-

@javierluraschi Spark, Kafka (handles temporary real-time data), and Shiny can work together. Oooooohhhh, neat. #Cascadiarconf

-

@javierluraschi I am laughing really loudly that @javierluraschi admitted that he has volumes of Knuth's "The Art of Computer Programming" but hasn't read them. #Cascadiarconf

-

@javierluraschi now demonstrating how streams work in Kafka by pulling #Cascadiarconf tweets from Twitter and recomputing in an R Notebook.

-

@javierluraschi He's now pulling images from these tweets using googleAuthR, and classifying them with googleVision. In real time. #Cascadiarconf